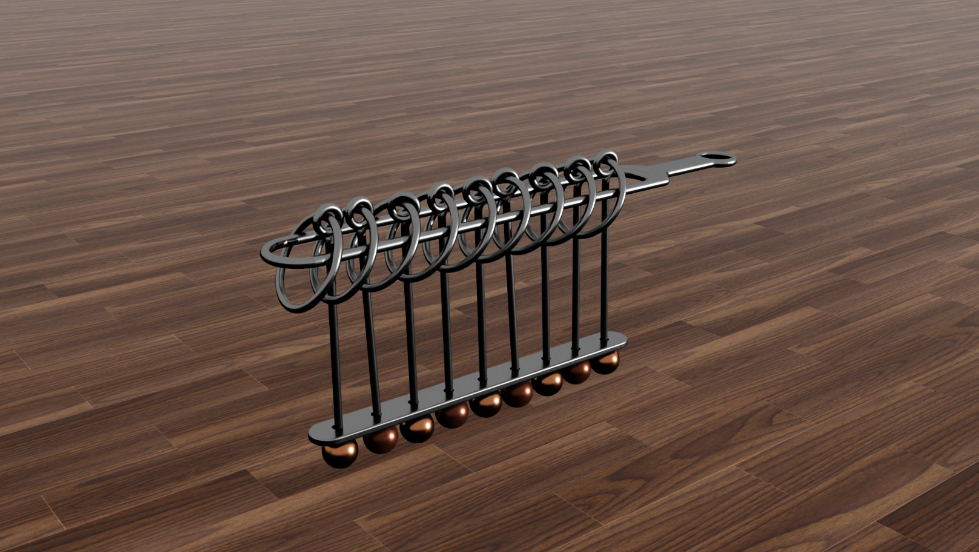

Nine Linked Rings

Project Log

The "Nine Linked Rings" puzzle (Chinese Ring Puzzle) is an ancient topological puzzle whose goal is to remove all rings from a beam. Solving it by hand requires a decent amount of dexterity, spatial awareness and a few basic logical insights.[1] Because its intricate rigid-body dynamics are virtually unpredictable in practice, the system can derail into many conceptually different states, each of which requires a different manipulation strategy to bring it back into a canonical state. Video 1 below shows a human hand solving the puzzle and recovering from some of these derailments.

This makes it difficult, if not virtually impossible, to solve the puzzle with a robot in a hard-coded fashion, even with perfect 3D perception. For this reason, the problem serves as an interesting research testbed to probe the limits of AI techniques such as motion planning, imitation learning and reinforcement learning. Although the puzzle can be solved with only two fingers, it requires a fair amount of dexterity, in particular proper force sensing and control combined with spatial understanding. Video 2 above on the right shows that the required dexterity is not so much about the "end-effector form factor". The main difference is that the strategy for pulling the rings up through the beam cannot rely on a sliding motion as a human hand does, but this can be compensated for by grasping through the beam from above.

High-Level Project Plan (as of now)

I might manage to get a foothold on a subproblem involving one, two or three rings with the following approach:

- Collect demonstrations in IsaacLab with an Allegro hand attached to a Franka arm, teleoperated with a mocap glove and an attached tracker for the wrist pose. See the project log of week 48/25 below for an initial result and the issues that need to be solved to reliably collect on the order of a few hundred demonstrations for one to two rings. The most important missing piece seems to be a foundation controller like Dexterity Gen (02/25). Unfortunately, the weights from this Meta FAIR paper are not published, and training the controller required substantial compute. But a custom version pretrained on basic ring-grasping RL tasks might be sufficient.

- Train a BC policy, e.g., with the mimic-one approach: essentially a visuomotor generative policy with a transformer architecture and pre-trained vision encoders, trained with a flow matching objective. It might be beneficial to additionally include force vectors at the end effector (EE) as input.

- Then leverage the confinement to simulation by steering the diffusion policy with latent space reinforcement learning. The agent might learn to make better use of tactile information, to which the teleoperator is oblivious. Incentivizing the agent to execute the task faster might also be possible.
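The flow matching objective mentioned in the BC step can be illustrated with a small NumPy sketch. It assumes the common linear-interpolation variant (noise at t = 0, demonstrated action chunk at t = 1), which may differ from what mimic-one actually uses. All names here (`flow_matching_batch`, `predict_velocity`, and so on) are hypothetical placeholders, and `predict_velocity` stands in for the transformer policy.

```python
import numpy as np


def flow_matching_batch(actions, rng):
    """Build (x_t, t, target velocity) for a batch of flattened action chunks.

    Assumes the linear path x_t = (1 - t) * noise + t * action.
    """
    noise = rng.standard_normal(actions.shape)      # x_0 ~ N(0, I)
    t = rng.uniform(size=(actions.shape[0], 1))     # one time value per sample
    x_t = (1.0 - t) * noise + t * actions           # point on the straight path
    target = actions - noise                        # constant velocity of that path
    return x_t, t, target


def flow_matching_loss(predict_velocity, obs, actions, rng):
    """Mean squared error between predicted and target velocity."""
    x_t, t, target = flow_matching_batch(actions, rng)
    pred = predict_velocity(x_t, t, obs)            # hypothetical policy network
    return float(np.mean((pred - target) ** 2))


def sample_actions(predict_velocity, obs, action_dim, rng, steps=10):
    """Generate actions by Euler-integrating the learned velocity field from noise."""
    x = rng.standard_normal((obs.shape[0], action_dim))
    dt = 1.0 / steps
    for i in range(steps):
        t = np.full((obs.shape[0], 1), i * dt)
        x = x + dt * predict_velocity(x, t, obs)
    return x
```

In this sketch, force vectors at the EE would simply be concatenated into `obs`; how the observation is encoded is left open here.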

Alternative Project Paths

There are also ways to deal with the above issues that rethink the project more generally:

- Use the standard Panda gripper in simulation instead of the Allegro hand. Maybe buy the Inverse3 from Haply Robotics for force sensing ($5000-$6000). The sigma.7 from Force Dimension would be ideal, but it is really expensive (55 to 110k British pounds).

- Do everything on real hardware:

  - Rebuild and use the ManipForce device to collect data

  - Use the leader-follower Franka setup in the lab

- Use kinodynamic motion planning for collecting data. It could also be an interesting project in itself to explore RRT-like algorithms through a differentiable simulation (via NVIDIA Warp).
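To make the kinodynamic planning idea concrete, here is a toy sketch of an RRT that grows its tree by forward-simulating sampled controls. It is an illustration under strong assumptions, not a plan for the actual puzzle: the `step` function is a 1D double integrator standing in for a physics simulator step, Euclidean distance in (position, velocity) space is a crude nearest-neighbor metric, and all names and parameters are hypothetical.

```python
import math
import random


def step(state, accel, dt=0.05, substeps=4):
    """Stand-in for one simulator rollout: integrate a 1D double integrator."""
    pos, vel = state
    for _ in range(substeps):
        pos += vel * dt
        vel += accel * dt
    return (pos, vel)


def kinodynamic_rrt(start, goal, iters=2000, tol=0.1, seed=0):
    """Grow a tree of dynamically feasible states; return it plus the goal index (or None)."""
    rng = random.Random(seed)
    nodes, parents, controls = [start], [None], [None]
    for _ in range(iters):
        # Sample a target state, with a small bias toward the goal.
        if rng.random() < 0.1:
            target = goal
        else:
            target = (rng.uniform(-2.0, 2.0), rng.uniform(-2.0, 2.0))
        # Nearest existing node under the (crude) Euclidean metric.
        near = min(range(len(nodes)), key=lambda i: math.dist(nodes[i], target))
        # Try a few random controls and keep the one that ends closest to the target.
        candidates = [rng.uniform(-1.0, 1.0) for _ in range(8)]
        accel = min(candidates, key=lambda a: math.dist(step(nodes[near], a), target))
        nodes.append(step(nodes[near], accel))
        parents.append(near)
        controls.append(accel)
        if math.dist(nodes[-1], goal) < tol:
            return nodes, parents, controls, len(nodes) - 1
    return nodes, parents, controls, None


def extract_controls(parents, controls, index):
    """Walk back from a node to the root and return the control sequence in order."""
    seq = []
    while parents[index] is not None:
        seq.append(controls[index])
        index = parents[index]
    return seq[::-1]
```

Because every edge is produced by the simulator itself, replaying the extracted control sequence from the start state reproduces the planned trajectory, which is what would make such a tree usable as a source of demonstrations. With a differentiable simulator, the random control sampling could in principle be replaced by gradient-based steering toward the target.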

Weekly Project Update Logs

Footnotes

[1] The insights are: (i) The first ring can always be removed from or attached to the beam. (ii) Any other ring can change its state if, and only if, the ring directly before it is on the beam and all rings before that one are off the beam. (iii) From the first two insights it follows that at any given time there are at most two possible moves (exactly two except in the two end states). After the first move there is not really an exploration problem anymore, since one of the two moves necessarily just reverses the previous move, which does not get us anywhere. So the problem is basically to find out in which of the two possible directions to run. After that it is merely autopilot. (iv) We can reason about which direction is the right one with a top-down approach. To this end, let's number the rings from 1 to 9 and denote by [1...i] the state in which rings 1 to i are off the beam and rings i+1 to 9 are on the beam. To get all rings off the beam we have to remove the ninth ring at some point, which can be done only in state [1...7]. To get there we have to solve the subproblem [1...5] first. This in turn requires first solving [1...3], and before that [1...1]. So the correct first move is to remove the first ring and then turn on autopilot. Alternatively, one can of course think of this as recursively solving subproblems. Fun fact: If we instead remove the second ring first and then "go in that direction", we run into a dead end where only the last ring is on the beam and the only option is to rewind everything.
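The rules and the "autopilot" idea from this footnote can be checked with a short simulation of the abstract puzzle state (ignoring all physics). This is a minimal sketch; the function names and the tuple-of-booleans state encoding are illustrative assumptions, not part of the project code.

```python
from typing import List, Tuple

State = Tuple[bool, ...]  # state[k] is True if ring k+1 is on the beam


def legal_moves(state: State) -> List[int]:
    """Return the 0-based indices of the rings that may change state."""
    moves = [0]  # insight (i): the first ring can always move
    # Insight (ii): ring k may move iff ring k-1 is on and all earlier rings
    # are off, i.e. ring k-1 is the first ring that is on the beam.
    if True in state:
        first_on = state.index(True)
        if first_on + 1 < len(state):
            moves.append(first_on + 1)
    return moves


def toggle(state: State, k: int) -> State:
    """Put ring k on the beam if it is off, or take it off if it is on."""
    return state[:k] + (not state[k],) + state[k + 1:]


def autopilot(n: int, first: int) -> Tuple[State, List[int]]:
    """Start with all n rings on, make the given first move, never reverse.

    Stops when all rings are off or when no non-reversing move is left.
    """
    state: State = (True,) * n
    goal: State = (False,) * n
    history: List[int] = []
    move = first
    while True:
        state = toggle(state, move)
        history.append(move)
        if state == goal:
            break
        options = [k for k in legal_moves(state) if k != move]
        if not options:  # dead end: only rewinding is possible
            break
        move = options[0]
    return state, history


final, moves = autopilot(9, first=0)   # remove ring 1 first
print(len(moves), any(final))          # 341 False

final, moves = autopilot(9, first=1)   # remove ring 2 first
print(len(moves), final)               # 170 moves, only ring 9 left on the beam
```

Starting with ring 1 clears the beam in 341 moves, while starting with ring 2 ends after 170 moves in the dead end described above.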